Building Intuition for LSTMs

Simplified introduction to a class of neural networks used in sequential modelling

Let’s say you want to go out for a nice dinner with your family, and you want to choose the perfect place. You think of a restaurant and spend some time reading reviews online. One of them reads:

As you sift through the review, you remember some of the keywords like “worst service”, “wrong orders”, “never be back”.

Then you read a couple of other reviews, such as:

Now you tend to forget some of the earlier information, such as “worst service”, and note words like “great experience”, “fresh ingredients”, “good swirls” and so on.

After you have read enough reviews, you have formed an impression of the restaurant. When someone asks you why you chose the place, you would not remember the exact words you read, but you would be able to tell them that it has fresh ingredients and that the service is quick most of the time. You may remember some of the bad things people said about it too.

What we have seen in this simple example is a framework for how we process a sequence of information (a time series, if you will). Every time you receive a new piece of data, you forget some of the old information, add some of the new, and update your opinion.
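To make this forget-and-add loop concrete, here is a toy sketch in Python. It is not an LSTM: it simply assumes each review has already been reduced to a sentiment score between -1 and 1 (a hypothetical simplification) and keeps a running opinion that drops a fixed fraction of the old opinion and takes in a fixed fraction of each new review. The names `update_opinion` and `form_opinion` and the fractions 0.3 and 0.7 are made up for illustration.

```python
def update_opinion(opinion, review_score, forget=0.3, accept=0.7):
    """Blend the old opinion with one new review score.

    forget: fraction of the old opinion to drop (hypothetical fixed value)
    accept: fraction of the new review to take in (hypothetical fixed value)
    """
    return (1 - forget) * opinion + accept * review_score


def form_opinion(review_scores):
    """Read the reviews one at a time, as in the restaurant example."""
    opinion = 0.0  # start with no opinion at all
    for score in review_scores:
        opinion = update_opinion(opinion, score)
    return opinion


# Hypothetical sentiment scores: one bad review, then two good ones
reviews = [-1.0, 0.8, 0.9]
print(round(form_opinion(reviews), 3))  # prints 0.679
```

The bad first review still pulls the opinion down a little, but its influence fades as newer reviews arrive. An LSTM does something similar, except that how much to forget and how much to accept are learned and recomputed at every step instead of being fixed.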

I’ll take the liberty of extending this line of thought: given that you have read a reasonably large number of reviews (your training set), you could also write a review yourself, even though you have never visited the restaurant. In short, you can produce new data based on previous data samples.

This is the kind of task an LSTM network is used for. LSTM stands for Long Short-Term Memory. The name is admittedly odd, so let me try to explain where it comes from.

From reading restaurant reviews to building powerful networks: LSTM

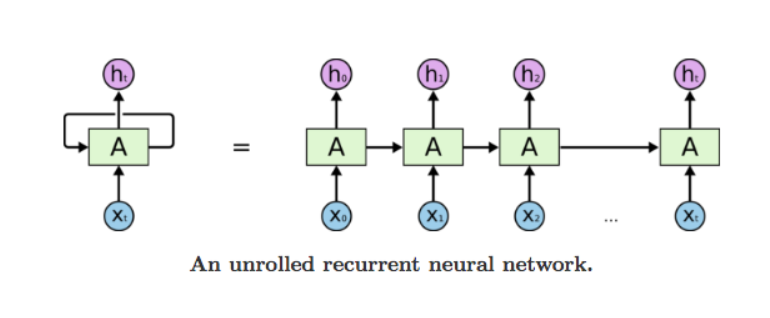

LSTM is a type of RNN (Recurrent Neural Network). An RNN is a network that takes a new input at every time step (one review at a time) and processes each input in turn. The same computation, with the same weights, is applied at every step, and its result is fed back in together with the next input, hence the name recurrent. In a real-time language translation system, the inputs are voice samples; in a stock market prediction system, they are a series of share prices. The structure of a typical RNN is shown in the figure. X0, X1, …, Xt are successive inputs, A represents the processing applied at each step, and h0, h1, …, ht represent the outputs at each time step. The network also carries an internal state (the hidden state, plus a cell state in an LSTM), which plays the role of your opinion of the restaurant.
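As a minimal sketch of what the box A might compute in a plain RNN, the code below assumes the common tanh update h_t = tanh(W_x x_t + W_h h_{t-1} + b), written in plain Python so it needs no libraries. The weights are made-up values rather than trained ones, and in this simplest form the output h_t and the hidden state are the same vector.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rnn_step(x, h_prev, W_x, W_h, b):
    """One step of a vanilla RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    pre = [a + c + d for a, c, d in zip(matvec(W_x, x), matvec(W_h, h_prev), b)]
    return [math.tanh(p) for p in pre]


def run_rnn(inputs, W_x, W_h, b):
    """Apply the same step, with the same weights, to every input."""
    h = [0.0] * len(b)  # initial hidden state: no opinion yet
    outputs = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)  # the new state is fed back at the next step
        outputs.append(h)
    return outputs


# Hypothetical weights: 2-dimensional inputs, 2-dimensional hidden state
W_x = [[0.5, -0.2], [0.1, 0.4]]
W_h = [[0.3, 0.0], [0.0, 0.3]]
b = [0.0, 0.1]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # X0, X1, X2

for t, h in enumerate(run_rnn(xs, W_x, W_h, b)):
    print(f"h{t} = {[round(v, 3) for v in h]}")
```

The loop is the "recurrent" part: every step reuses `rnn_step` with identical weights, and the only thing that carries information forward is the state `h`.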

Traditional RNNs suffer from short-term memory: they struggle to retain context from many steps back. Think of it this way: as you read more reviews, you keep forgetting what you read just 2–3 reviews ago, so you may not be able to make a good decision.
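A small numerical experiment can make this short-term memory concrete. The sketch below assumes a one-unit RNN, h_t = tanh(w h_{t-1} + u x_t), with a made-up recurrent weight w = 0.5, and measures how much a tiny nudge to the very first input still changes the final state. Each step scales that influence by roughly w, so it shrinks geometrically with the length of the sequence. The same repeated multiplication shrinks the gradients used during training, which is usually called the vanishing gradient problem.

```python
import math


def final_state(xs, w=0.5, u=1.0):
    """Run a one-unit RNN h_t = tanh(w * h_{t-1} + u * x_t); return the last state."""
    h = 0.0
    for x in xs:
        h = math.tanh(w * h + u * x)
    return h


def influence_of_first_input(n_steps, eps=1e-6):
    """Finite-difference estimate of how much the first input affects the final state."""
    xs = [0.0] * n_steps
    base = final_state(xs)
    xs[0] = eps  # perturb only the first "review"
    return abs(final_state(xs) - base) / eps


for n in (1, 3, 5, 10):
    print(n, f"{influence_of_first_input(n):.2e}")
```

With these assumed values the influence falls to about 0.5 ** (n - 1): by the tenth step, the first input barely matters anymore.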

LSTMs address this problem with a forget gate and an input gate, which decide how much of the old information to forget and how much of the new information to accept. This gives the network much more control over what it keeps in its long-term memory.
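The gate idea can be sketched as a single LSTM step in plain Python. This follows the widely used formulation with a forget gate f, an input gate i, candidate values g and an output gate o (the standard cell also has this output gate, which decides how much of the memory to expose): c_t = f * c_{t-1} + i * g and h_t = o * tanh(c_t), all element-wise. The helper names and the hand-picked weights in `make_params` are hypothetical, chosen only so that the forget gate alone decides whether an early signal survives; a real network learns its weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Compute W v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step. params maps gate name -> (W, b); each W acts on [h_prev, x]."""
    z = h_prev + x  # concatenate the previous hidden state and the current input
    f = [sigmoid(v) for v in affine(*params["forget"], z)]       # how much old memory to keep
    i = [sigmoid(v) for v in affine(*params["input"], z)]        # how much new info to accept
    g = [math.tanh(v) for v in affine(*params["candidate"], z)]  # the new info itself
    o = [sigmoid(v) for v in affine(*params["output"], z)]       # how much memory to expose
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def make_params(forget_bias):
    """Hypothetical hand-picked weights: hidden size 1, input size 1."""
    return {
        "forget": ([[0.0, 0.0]], [forget_bias]),  # constant forget gate set by its bias
        "input": ([[0.0, 5.0]], [0.0]),           # accept more when the input is large
        "candidate": ([[0.0, 1.0]], [0.0]),       # candidate value is tanh(x)
        "output": ([[0.0, 0.0]], [0.0]),
    }


def run_lstm(xs, params):
    """Feed a scalar sequence through the cell and return the final cell state."""
    h, c = [0.0], [0.0]
    for x in xs:
        h, c = lstm_step([x], h, c, params)
    return c[0]


sequence = [1.0] + [0.0] * 9  # one strong signal, then nothing new
print(round(run_lstm(sequence, make_params(5.0)), 3))   # forget gate near 1: remembers
print(round(run_lstm(sequence, make_params(-5.0)), 3))  # forget gate near 0: forgets
```

With the forget gate held close to 1, the early signal stays close to its original level after nine quiet steps; with it held close to 0, the memory is wiped almost immediately. That switch between the two behaviours is the extra control a plain RNN lacks.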

The more technical aspects of LSTM are tackled in this article.

LSTM Applications

There are a lot of cool things that LSTMs can do. If you train an LSTM network on a lot of Shakespeare, it can start producing text that reads like Shakespeare. The following sample was generated by an LSTM network [reference]:

PANDARUS:

Alas, I think he shall be come approached and the day

When little srain would be attain’d into being never fed,

And who is but a chain and subjects of his death,

I should not sleep.

Second Senator:

They are away this miseries, produced upon my soul,

Breaking and strongly should be buried, when I perish

The earth and thoughts of many states.

You can generate music, forecast stock prices, predict text, autocomplete your emails and do lots of other interesting things with LSTMs. We can also combine CNNs (convolutional neural networks) with LSTMs. For example, CNNs are very good at extracting features from images, and these features can be fed into an LSTM to generate captions for those images.

Not everything in life is perfect, and neither are LSTMs. As with any AI algorithm, the results are only as good as the quality of the training set and the extent of the training.

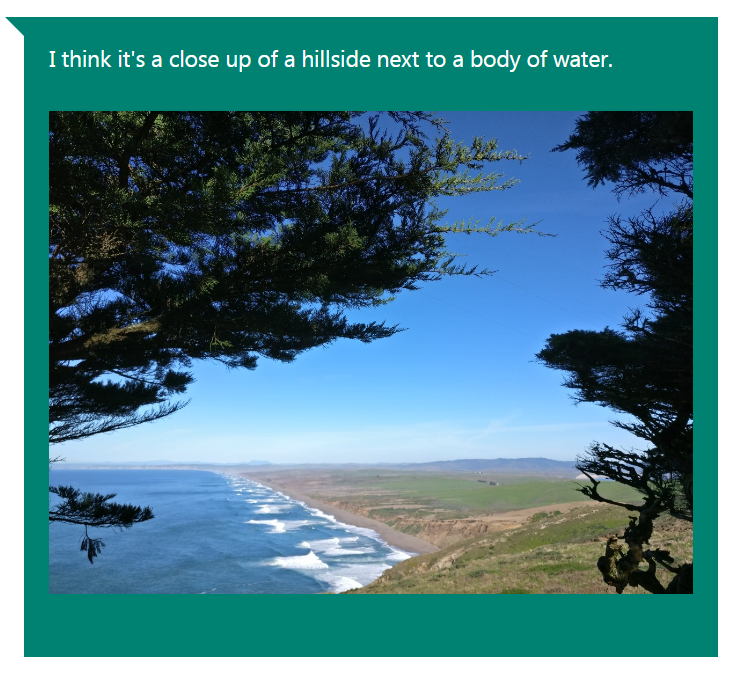

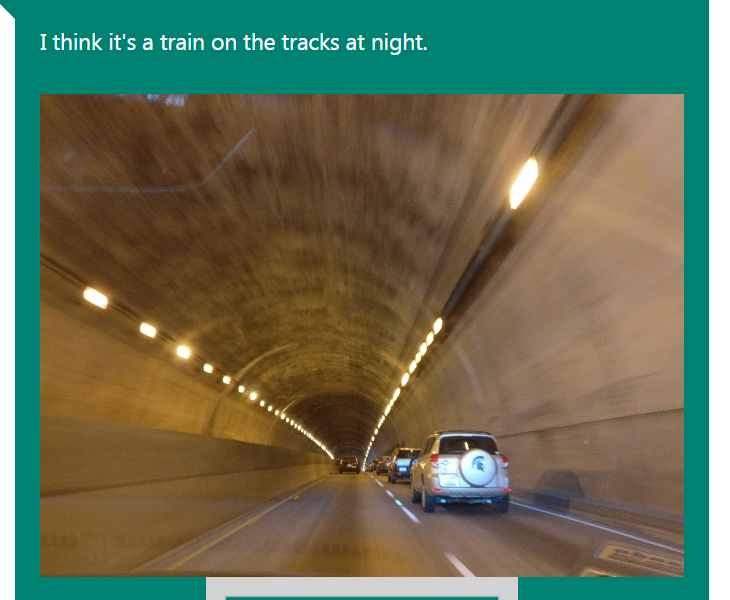

Microsoft Research has created this Image Captioning Bot. Try it out for yourself and have fun!

I hope this short introduction has given you a high level idea of what LSTMs are used for. If you are interested in more LSTM applications in action, you can read this excellent article.

X8 aims to organize and build a community for AI that is not only open source but also looks at the ethical and political aspects of AI. More simplified AI concepts like this will follow. If you liked this or have any feedback or follow-up questions, please comment below.
